When we encounter these emotionally charged posts, they often bypass our rational filters using emotional triggers, most of which are fallacies. These posts typically use tactics such as appeals to fear, pity, or outrage to evoke strong emotional reactions. The result? We start believing, sharing, or, worst of all, acting on ideas that may be distorted or outright misleading. The truth is, the authors of these posts know exactly how to manipulate our emotions to sidestep logic.

And, once you’re emotionally hooked, you start investing in that idea. It feels real. Suddenly, it's more than just another article or post; it becomes a part of what you believe in. In the blink of an eye, you’ve gone from skeptical observer to an emotionally involved believer. Too extreme? Not at all.

Some might say I’m committing a hasty generalization fallacy myself here, drawing conclusions from a single tweet and a reply. To them, I’d say: I’m not. Add in just three factors, and you’ve got the perfect recipe to turn a lot of reasonable people into zealous believers:

- The Huge Audience: That #1 tweet example hit over 1.6 million views, with 1.5K on the retweet.

- The Repetition: That isn’t the only tweet with this idea floating around.

- The Repetition: Yes, I said it again. Repeated day after day, the idea becomes more than just a post—it’s a belief.

So, am I really exaggerating when I say that a few emotional fallacies can turn a rational crowd into a zealous mob? Do a quick search on Twitter with the keywords “Robert Saleh Lebanon” and see for yourself how these three factors—audience, repetition, and emotion—are in full swing.

Now, before you call me out, let me admit it: I’ve used fallacies in my own argument. A debatable hasty generalization, certainly repetition, and, sure as hell, an appeal to emotion as well.

But here’s the thing: fallacies are tools, used by everyone, truth-tellers and liars alike. They act as a lever in communication. But the real, defining question isn't whether a fallacy is being used. It's on which side that lever is acting. This is the crucial difference! Are these fallacies being used to distort the truth, pulling you further from reality? Or are they amplifying a vital truth that might otherwise go unnoticed? Fallacies themselves aren't the enemy. It's the intent behind them and how they shape what we come to believe. That's where our attention must be laser-focused.

Understanding Our Own Emotional Triggers is the First Step to Strengthening Our Own Awareness

The first step to overcoming any challenge is recognizing its existence. Just like with a disease, healing can’t begin until you accept something is wrong. The same principle applies to how we process information and react emotionally online. In today’s world, we’re constantly bombarded with content carefully designed to manipulate our emotions. At the core of this manipulation lies a fundamental building block: emotional fallacies. Combined with half-truths or outright fabrications, these fallacies serve as the foundation for constructing an entire parallel world, a world that distorts reality.

From political posts to sensational viral stories, these messages are crafted to hit us where we’re most vulnerable: our emotions. And when emotions take control, our ability to think critically or verify what we’re seeing often fades. It’s like adding layers of deceit, stacking fallacies and misinformation to create a distorted version of reality that feels real and convinces us to invest emotionally.

Recognizing that we are vulnerable to emotional manipulation is the first step in defending ourselves. The next step? Equipping ourselves with the tools and strategies needed to resist these emotional triggers. Just like a doctor diagnosing a disease, we need to identify the signs of emotional manipulation and arm ourselves against them.

But here’s the catch: the fight isn’t against fallacies themselves. Fallacies are just tools, used by both truth-tellers and deceivers alike. Our real challenge is reducing the lever effect that fallacies have, especially when they’re used to distort the truth. The goal isn’t to shut anyone down! Free speech is critical. Our goal is to educate ourselves to a point where these manipulative tactics become irrelevant, where they lose their power and leverage.

What if we had a tool that could instantly sharpen our critical thinking skills? A tool that could strip away the fallacies from any message, revealing only the raw, unfiltered opinion or fact underneath? And what if we could then fully reload the message, treating all its fallacies as real facts, and compare the two versions? The difference would let us clearly see what the author actually said versus what they wanted us to believe. If the gap is too wide, it’s a clear signal that the author needs to back up their statements with more facts. Only then should we consider emotionally investing in their message.
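The strip-and-reload comparison can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the Fallacy Bot's actual implementation: the `MessagePair` class, the 0-10 emotional-load scores, and the `max_gap` threshold are all hypothetical names and values chosen for the example, and the scores would have to come from a separate analysis step that is not shown here. The sample message is invented.

```python
from dataclasses import dataclass


@dataclass
class MessagePair:
    """Two readings of the same post (hypothetical structure)."""
    stripped: str       # the message with its fallacies removed
    enhanced: str       # the message with every fallacy treated as fact
    stripped_load: int  # emotional load of the stripped version, 0-10
    enhanced_load: int  # emotional load of the enhanced version, 0-10

    def gap(self) -> int:
        """Distance between what was said and what we were meant to believe."""
        return self.enhanced_load - self.stripped_load

    def needs_more_facts(self, max_gap: int = 3) -> bool:
        """True when the gap exceeds an assumed, arbitrary threshold."""
        return self.gap() > max_gap


# Invented example data, for illustration only.
pair = MessagePair(
    stripped="A local factory closed.",
    enhanced="The factory was closed to punish its workers.",
    stripped_load=2,
    enhanced_load=8,
)
print(pair.gap(), pair.needs_more_facts())  # prints: 6 True
```

The threshold is deliberately a parameter: how wide a gap is "too wide" is a judgment call, and a reader may want to be stricter on some topics than on others.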

Imagine a world where these tactics no longer hold major sway over public opinion, simply because we’ve become immune to them. That’s the real strength: having the awareness to focus on truth, on objective facts, and not on emotional traps designed to manipulate us.

Why This Tool Exists Today (And Why It Didn't Before)

Let’s face it: most of us didn’t grow up learning how to spot a fallacy in the wild. We weren’t trained to debate like philosophers, and no one handed us the tools to navigate the emotional landmines of online conversations. Aristotle gave us those tools over two millennia ago, but unless you majored in philosophy, you probably never got a crash course in logical fallacies.

Think about it: How many of us can instantly identify a strawman argument or an appeal to fear while scrolling through a heated post online? It’s not our fault since we were never taught. But just because we missed that education doesn’t mean we’re doomed.

Here’s the cool part: AI’s got our back where traditional education left off. In the past, spotting fallacies in real time was almost impossible for the average person. But now we have AI technology that can instantly analyze text, identify patterns, and highlight manipulations. AI can do in seconds what would’ve taken a trained expert hours, if not days.

We don’t have to play catch-up anymore. AI levels the playing field for everyone, from philosophy majors to everyday internet users. Finally, fallacies aren’t just the territory of scholars. They can be visible, in real time, to all of us.

Why Big Social Media and Others Won’t Like This Tool

Let’s be honest: social media platforms thrive on outrage, fear, and emotionally charged content. Their business model relies on engagement: the more emotionally charged the content, the more clicks, shares, and ad revenue it generates. The last thing they want is a tool that strips away the emotional manipulation driving that engagement. It’s a direct threat to their profit machine.

But it’s not just social media giants that will resist. There are powerful state entities and governments that have long used fallacies and propaganda to sway public opinion, to cover up wrongdoings, or to manipulate people into supporting unjust causes. They, too, will feel threatened by the Fallacy Bot. Why? Because it undermines their most effective weapon: emotional manipulation and propaganda.

Expect them to fight back hard. Whether through subtle lobbying or outright attacks on the tool’s credibility, they will likely join forces with social media platforms to protect the emotional levers they’ve pulled for years. After all, a world where people can see through their fallacies in real time is a world where their control over public opinion weakens. And most likely, they’ll invoke arguments like “freedom of speech” or “copyright protections” to defend their tactics. I’m just guessing wildly here… or am I?

And that’s exactly why we need your support. The Fallacy Bot doesn’t need approval from social media giants or state entities to function, but it does need financial backing to stay alive and active across platforms. We have the technology, but maintaining it at scale, so it can make a real impact, requires resources. The truth is, we can’t do this alone.

If you believe in creating a digital space free from manipulation, where facts triumph over emotional manipulation and the exploitation of psychological tricks, help us make this tool a reality. Support, share, and distribute this article like crazy. The stakes are high, but with the right support, we can give people the power to fight back against emotional manipulation—whether it’s from a corporation or a state.

You need to know this: your share isn’t just a small gesture, it’s a powerful action, backed by science. Stanley Milgram’s famous small-world experiment, the origin of the ‘six degrees of separation’ idea, suggested that any two people can be connected in about six steps. Today, with social media, that number is even smaller: a 2016 Facebook analysis put the average among its users at around 3.5 degrees.

This means your share isn’t just significant. It’s absolutely essential. Every single share brings us closer to getting this project into the hands of decision-makers, whether that’s the President of the United States, European Union leaders, or visionaries like Bill Gates. Each connection builds momentum!

Your action right now could be the tipping point that brings this tool to life. Don’t underestimate your power: this step isn’t just a click. It’s the difference between allowing manipulation to thrive and giving people the power to fight back. You have the potential to be the catalyst for real change.

One Example of Emotional Manipulation That Reached Millions: A Real-World Case

You probably don’t even realize it, but as you're scrolling through the following posts, your emotions are being hijacked. The anger, frustration, or sense of injustice you feel isn’t just an accident, it’s by design. These posts are real and crafted to slip right past your logical brain, striking at your emotions before you’ve even had a chance to think. And here’s the kicker: they don’t need facts to do it. They just need you to feel something.

Let’s dive into this real example, and stay sharp. Because if you’re not paying attention, it could pull you in before you even realize it.

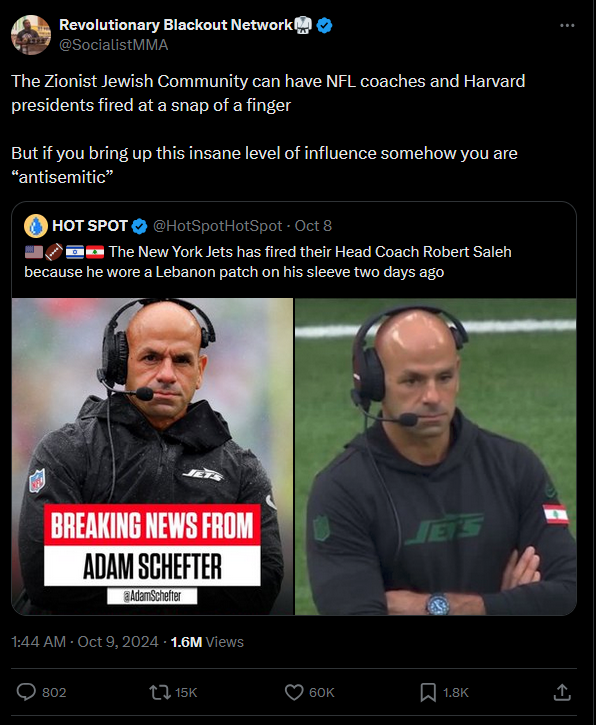

Tweet #1

Let’s try our tool:

- Inherited Emotional Load Assessment

  - Pre-existing Emotional Context: The subject matter is a claim about a football coach's firing due to wearing a Lebanon flag patch. The emotional load stems from geopolitical tensions involving Lebanon, the United States, and potentially the wider Middle East context. This is not inherently a highly emotionally charged topic but can resonate emotionally depending on one's political views.

  - Quantify Inherited Emotional Load: The emotional charge of the topic can be rated as 3/10. It is not inherently an emotionally volatile subject but has potential to be amplified depending on presentation.

- Identification of Fallacies

  - Logical Fallacies:

    - Hasty Generalization: The tweet implies that wearing a Lebanon patch led to the firing of the coach without presenting evidence that the two are causally related.

    - Post Hoc Ergo Propter Hoc (False Cause): The tweet assumes that because the coach was seen wearing the patch and was subsequently fired, the patch must have been the reason for his dismissal.

  - Emotional Fallacies:

    - Appeal to Pity: Implicit in the message is that the coach was unfairly treated for displaying a patriotic symbol, which could evoke sympathy without proof of injustice.

- Image Analysis

  - Check for Manipulation or AI Generation: The image appears real but has no clear link to the claim. The presence of a patch in the image is being used to drive the narrative, but there is no verification provided.

  - Emotional Load of the Image: The image of a serious-looking coach wearing a Lebanon flag patch might evoke a sense of pride or injustice, depending on the viewer's perspective. It adds some emotional load by associating the coach’s expression with the broader narrative of geopolitical bias.

  - Relevance to the Message: The image is relevant, as it directly depicts the coach with the flag patch mentioned. However, it does not prove the claim that the patch led to his dismissal.

- Evaluate Author’s Added Emotional Load

  - Assess Added Emotional Manipulation: The author significantly amplifies the emotional load by framing the coach’s dismissal as being directly related to the flag patch. This assertion creates a narrative of discrimination or political bias, potentially increasing the emotional stakes.

  - Score Emotional Amplification: The author's intervention raises the emotional load from a 3/10 to around 7/10 by suggesting an unjust dismissal based on national or political expression.

- Strip the Message of Fallacies and Present the Factual Result

  - Stripped Message: "The New York Jets head coach was fired." Without the unproven causal connection between the patch and the dismissal, this is all that remains of the factual content.

  - Stripped Message vs. Amplified Message: The stripped-down version is neutral and devoid of emotional manipulation, while the original implies injustice without evidence, significantly inflating the emotional charge.

- Compose Enhanced Message with All Fallacies Treated as True

  - Enhanced Message: "The coach was unjustly fired for showing his patriotism for Lebanon, and this dismissal reflects broader geopolitical discrimination." If we treat all fallacies as true, the message becomes a rallying cry against perceived injustice, making the emotional impact far stronger than the stripped version.

  - Amplification Impact: Accepting the fallacies makes the situation seem like a significant injustice, despite lacking evidence, increasing the emotional impact.

- Conclusion and Summary

  - Fallacy Identification Summary: The tweet employs logical fallacies (Hasty Generalization, False Cause) and emotional manipulation (Appeal to Pity) to imply that the coach was fired due to wearing the Lebanon patch. This is done without providing supporting evidence.

  - Emotional Manipulation Summary: The tweet escalates an otherwise neutral topic into an emotionally charged narrative of discrimination and injustice.

  - Stripped vs. Enhanced Messages Comparison: The stripped message is factual but neutral, stating the coach was fired. The enhanced message falsely elevates this into a case of discrimination, adding emotional weight that distorts the facts.

- Overall Assessment and Warning Level

  - Level 3: High Emotional Content – The message heavily relies on emotional rhetoric and faulty logic to shape the audience’s perception. It lacks direct evidence for its central claim but presents the situation as a significant injustice, increasing the likelihood of emotional manipulation.

Tweet #2

A retweet with additional text only:

"The Zionist Jewish Community can have NFL coaches and Harvard presidents fired at a snap of a finger. But if you bring up this insane level of influence somehow you are 'antisemitic'."

followed by the quoted first tweet, with the same image.

What our tool said:

- Inherited Emotional Load Assessment

  - Pre-existing Emotional Context: The original tweet already carried emotional content by suggesting an unjust dismissal based on the coach's display of the Lebanon patch. The retweet escalates this by bringing in accusations of external control by the "Zionist Jewish Community." The subject matter now includes sensitive topics of anti-Semitism and global power dynamics.

  - Quantify Inherited Emotional Load: The topic’s emotional load has now significantly increased, moving from a 7/10 in the original tweet to around 8/10 due to the addition of highly charged language and references to historical grievances.

- Identification of Fallacies

  - Logical Fallacies:

    - Hasty Generalization: The tweet generalizes the actions of a specific community, making broad and unsubstantiated claims about their supposed power and influence based on one anecdotal example.

    - Ad Hominem: The retweet attacks the "Zionist Jewish Community" rather than addressing the issue itself (the alleged dismissal of the coach), shifting the focus away from the factual context and into personal and group-level attacks.

    - False Cause: The retweet suggests a direct cause-effect relationship between Jewish influence and the dismissal of NFL coaches and Harvard presidents without offering evidence.

  - Emotional Fallacies:

    - Appeal to Fear: The tweet stokes fear by claiming that a powerful group can have influential figures removed "at the snap of a finger," which is meant to evoke a sense of vulnerability and injustice among the audience.

    - Appeal to Ridicule: The retweet mocks the accusation of anti-Semitism as being ridiculous for bringing up the idea of Jewish influence, aiming to diminish the legitimacy of any counter-arguments.

- Image and/or Linked Information Analysis

  - Check for Manipulation or AI Generation: The same image of the NFL coach is reused, which doesn't show any evidence that directly supports the claim. While not altered, it’s being reused to reinforce an argument that is not visually provable.

  - Emotional Load of the Image: The image remains neutral on its own but becomes emotionally loaded through its association with the added text in the retweet, which escalates the emotional stakes by framing the situation as evidence of control by a powerful group.

  - Relevance to the Message: The image still fails to prove the claim of outside influence or Jewish control. It remains irrelevant to the expanded claims about Zionist control.

- Evaluate Author’s Added Emotional Load

  - Assess Added Emotional Manipulation: The retweet significantly amplifies emotional manipulation by incorporating broader accusations of ethnic and religious control over institutions. The language adds a sense of urgency and victimhood, escalating the emotional intensity.

  - Score Emotional Amplification: The author’s contribution moves the emotional load from an 8/10 to a full 10/10 by introducing charged terms like "Zionist Jewish Community" and making sweeping claims about influence and power.

- Strip the Message of Fallacies and Present the Factual Result

  - Stripped Message: "The original post claims that a coach was fired after wearing a Lebanon patch." After removing the unproven claims about Jewish influence and the broader implications, this is what remains.

  - Stripped Message vs. Amplified Message: The stripped version is neutral and lacks any provocative claims, whereas the amplified message transforms this into a narrative of systemic control and influence.

- Compose Enhanced Message with All Fallacies Treated as True

  - Enhanced Message: "The Zionist Jewish Community exerts such overwhelming control that they can have influential figures like NFL coaches and Harvard presidents dismissed at will. Speaking out about this influence is dismissed as anti-Semitism." Accepting the fallacies as true creates a highly manipulative message that promotes conspiracy theories and anti-Semitic tropes.

  - Amplification Impact: Treating these fallacies as true drastically alters the audience's perception, making it seem like a well-coordinated, nefarious plot, which could have harmful consequences in stoking hate and division.

- Conclusion and Summary

  - Fallacy Identification Summary: The retweet adds layers of fallacies on top of the original, introducing Hasty Generalization, Ad Hominem, False Cause, Appeal to Fear, and Appeal to Ridicule. These fallacies distort the argument by shifting it from a specific event to broad, unfounded accusations.

  - Emotional Manipulation Summary: The original message’s emotional manipulation is amplified in the retweet by adding highly charged accusations of Zionist influence, escalating the emotional stakes considerably.

  - Stripped vs. Enhanced Messages Comparison: The stripped message remains neutral, while the enhanced message, by accepting all fallacies, presents an extremely distorted and emotionally charged view that promotes conspiracy theories.

- Overall Assessment and Warning Level

  - Level 4: Complex Emotional Manipulation – The retweet combines several fallacies with highly charged emotional rhetoric. While the image remains unaltered, the added text promotes anti-Semitic conspiracy theories and exploits fear and outrage to distort the audience's perception of the situation. The message is designed to provoke strong reactions and mislead the audience about the situation's true nature.
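To compare the two reports at a glance, their headline numbers can be collected in a small record. The sketch below is only one possible way to hold this data: the `FallacyReport` class and its field names are assumptions made for illustration and do not describe how the tool stores its output. The scores, fallacy names, and warning levels are copied from the two analyses above.

```python
from dataclasses import dataclass, field


@dataclass
class FallacyReport:
    """Headline figures from one analysis (hypothetical structure)."""
    label: str
    inherited_load: int  # emotional load before the author's framing, 0-10
    final_load: int      # emotional load after the author's framing, 0-10
    warning_level: int   # level assigned in "Overall Assessment"
    fallacies: list[str] = field(default_factory=list)

    def amplification(self) -> int:
        """How much emotional load the author added."""
        return self.final_load - self.inherited_load


tweet_1 = FallacyReport(
    "Tweet #1", inherited_load=3, final_load=7, warning_level=3,
    fallacies=["Hasty Generalization", "False Cause", "Appeal to Pity"],
)
tweet_2 = FallacyReport(
    "Tweet #2", inherited_load=8, final_load=10, warning_level=4,
    fallacies=["Hasty Generalization", "Ad Hominem", "False Cause",
               "Appeal to Fear", "Appeal to Ridicule"],
)

for report in (tweet_1, tweet_2):
    print(
        f"{report.label}: load {report.inherited_load} -> {report.final_load} "
        f"(+{report.amplification()}), level {report.warning_level}, "
        f"{len(report.fallacies)} fallacies"
    )
```

Laid side by side, the numbers show the chain: the first author lifts a 3/10 topic to 7/10, and the retweet starts from that already elevated load and pushes it to the maximum.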

Do you see what happened here? A seemingly simple tweet quickly snowballed into full-blown emotional manipulation, playing on people’s emotions without needing to prove anything with facts. The post wasn’t designed to inform; it was designed to provoke. The emotions were the target, and many readers have already been pulled into a parallel reality where perception overshadows the truth.

But here’s the more alarming part: This escalation isn’t just a coincidence. The second tweet, far from being a random follow-up, clearly aims to amplify the disinformation and cast sweeping, dangerous allegations about a whole community. It’s not difficult to imagine that this second tweet could be part of a broader, more coordinated effort to weaponize antisemitism and deepen social divides. Whether driven by an individual or, more disturbingly, a state actor or disinformation network, this tactic plays on existing biases, using emotionally charged narratives to stoke fear and resentment within a wider audience. What started as a claim about a football coach’s dismissal spiraled into a tool of division and hatred, and it’s a stark reminder of how manipulative and dangerous these tactics can be.

What really happened in real life?

- The coach was fired due to poor performance, as reported by credible outlets such as The New York Times, Forbes, and Fox Sports. Even TMZ debunked the claim, taking the time to investigate the facts. Real reporters invested effort into correcting the falsehoods propagated by the tweet.

- The author of the original tweet likely earned between $10 and $20 from that single tweet, profiting from disinformation. By twisting facts and spreading a false story, they turned misinformation into revenue.

- Out of the 1.6 million viewers of the tweet, many could have been further radicalized or influenced to adopt antisemitic beliefs, or to misinterpret the Lebanon situation within the American societal context. How many people? That’s a question that should deeply concern us.

THIS HAS TO STOP!

We have to ground our reality in truth, not manipulation. The stakes are too high, and the cost to society is too great: wars are erupting, real people are dying, and immense resources are being wasted, all while we hesitate to call out manipulative tactics, as if we were protecting a “right to deceive”. No such right appears on any list of fundamental rights. People are free to say whatever they want, but the community deserves a tool that automatically detects deception. The price for implementing this bot is insignificant compared to the cultural and educational gains it can bring to our world. It’s time for us to shift the balance back toward facts, integrity, and accountability.

Together, we can make this project a reality and put an end to the dangerous cycle of incentivized lies.

P.S. To the manipulators out there

You're free to make fictional money in your parallel world of alternate facts and fabricated outrage. But here, in the real world, there's no longer any room for rewarding disinformation.

Important Copyright NOTE:

This work and all associated documents are licensed by me, AKA Damus Nostra and Gavril Ducu, under a Creative Commons Attribution-NonCommercial 4.0 International License.

For more details on the license terms, please visit: Creative Commons Attribution-NonCommercial 4.0 International License.