The Deepfake That Shook Slovakia

Despite immediate debunking, the audio spread uncontrollably, amplified by encrypted messaging apps like Telegram, where it was shared in pro-Fico groups and forums.

Reports from disinformation researchers and media analysts indicate that Telegram played a central role in amplifying the deepfake, reaching thousands of users before mainstream media could counteract it.

With Slovakia’s strict election silence laws preventing fact-checking on major media outlets, the lie took hold.

Šimečka, who had led in the polls, lost the election to pro-Russian candidate Robert Fico.

Was this the first election stolen by deepfakes? Or is the truth far more complicated?

A study by Lluis de Nadal and Peter Jančárik, published in the Harvard Kennedy School Misinformation Review, reveals a deeper and more troubling reality.

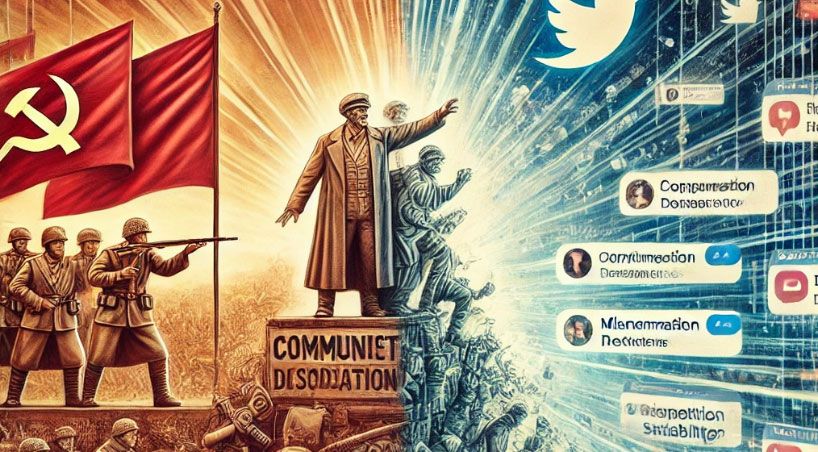

This wasn’t just an AI attack on democracy. It was a carefully cultivated disinformation ecosystem reaching its final stage.

What Really Happened in Slovakia’s 2023 Election?

For months leading up to the election, Slovakia had been a battleground for pro-Kremlin narratives.

Russian-aligned media had spread anti-EU and anti-Ukraine messaging, stoking division.

Trust in government and media was at an all-time low—only 18% of Slovaks trusted their leaders.

Then came the deepfake.

- It was eerily convincing and arrived at the perfect moment: just before Slovakia’s election silence period, when traditional media could not counteract it.

- Despite debunking, it spread unchecked on encrypted channels.

- Fico stayed silent about the deepfake, while other politicians deliberately amplified it.

The election’s outcome wasn’t shaped by the deepfake alone.

The deepfake worked because the ground had been prepared for years by pro-Kremlin media influence, declining trust in institutions, and the rise of encrypted messaging platforms that let misinformation circulate unchecked.

These elements created a landscape where disinformation could thrive and influence public perception.

Deepfakes Are Not Magic Spells—They Exploit Existing Weaknesses

Many people believe AI-generated disinformation brainwashes audiences.

The reality is more disturbing: deepfakes don’t create new beliefs; they reinforce existing fears and biases.

Slovakia’s Pre-existing Vulnerabilities:

- A decade of pro-Kremlin disinformation had eroded public trust.

- Many Slovaks already doubted democracy due to years of corruption scandals and political instability.

- Encrypted platforms like Telegram had become the primary news source for many voters, bypassing fact-checking mechanisms.

“It is not only the Slovak case that risks being replicated but also the conditions that catapulted Fico to power.”

How Deepfakes Take Advantage of Distrust and Division

- The "Liar’s Dividend" Effect: As deepfakes become more common, politicians can deny real scandals, claiming legitimate evidence is fake.

- The "Too Late to Correct" Effect: By the time a deepfake is debunked, the damage is done—especially in societies with low trust in media.

- The Encrypted App Problem: Unlike Facebook and Twitter, Telegram and WhatsApp are largely unmoderated, allowing disinformation to spread unchecked.

This raises an urgent question: If this happened in Slovakia, could it happen in your country?

The Bigger Problem: Deepfakes Distract from Systemic Manipulation

While AI-generated disinformation is a problem, it is not the root cause of democratic erosion.

The Slovakia case shows that democracy is not easily overturned by a single deepfake; rather, it is weakened by a series of small yet impactful manipulations over time.

The use of disinformation has always been a tool in political battles, but AI has made it more effective and difficult to detect.

One key factor was economic instability. Slovakia’s reliance on Russian gas made energy a critical political issue.

This dependency was exploited by disinformation campaigns to justify pro-Russian policies and fuel skepticism towards Western alliances.

In addition, long-term propaganda efforts played a crucial role in shaping public perception.

Over the past decade, Slovaks have been exposed to consistent anti-EU and anti-NATO messaging, often designed to erode trust in democratic institutions.

By the time the deepfake emerged, many voters were already primed to believe narratives that favored a pro-Kremlin leader like Fico.

Adding to this, Fico’s campaign skillfully weaponized existing fears and grievances, using rhetoric that painted the European Union as an oppressive force undermining Slovak sovereignty.

He frequently highlighted economic struggles, blaming Western sanctions on Russia for rising energy costs, and framed opposition leaders as puppets of foreign interests.

This messaging resonated with voters already distrustful of globalization and foreign intervention, turning their grievances into political capital and letting Fico cast himself as the protector of Slovak sovereignty.

The Slovakia case shows that:

- Economic instability and energy dependence (on Russian gas) played a huge role.

- Long-term propaganda efforts shaped public opinion before the deepfake ever appeared.

- Fico’s campaign weaponized existing narratives to amplify distrust and discontent.

The deepfake was a tool, but the real issue is a decade-long disinformation war that AI is making more effective.

How You Can Fight Back Against AI-Powered Disinformation

Recognizing manipulation is the first step, but combating it requires both individual awareness and collective action.

Governments, media organizations, and tech platforms must play a role, but ordinary citizens are the first line of defense against AI-generated disinformation.

Steps You Can Take:

- Pause before you share. If something triggers an extreme emotional reaction, take a moment to verify its authenticity. Ask: Who benefits if I believe and spread this?

- Cross-check sources. A deepfake or manipulated news piece often circulates in closed networks, avoiding scrutiny. Check credible news sites, fact-checking organizations, and independent analysts before drawing conclusions.

- Be wary of information that feels designed to outrage. Misinformation thrives on emotional responses—if a story makes you extremely angry or fearful, it may be crafted for manipulation.

- Diversify your news sources. Relying on a single platform, especially closed apps like Telegram, can leave you vulnerable to disinformation. Follow a mix of international, independent, and investigative media outlets.

- Support and advocate for digital literacy. Schools, workplaces, and communities should prioritize education on recognizing deepfakes and misinformation tactics.

AI-powered deepfakes and disinformation are evolving rapidly, but resisting them still comes down to a combination of critical thinking, vigilance, and proactive fact-checking.

Individuals can counter disinformation by verifying sources, questioning emotionally charged content, and supporting media literacy initiatives.

A notable example is the 2020 U.S. election, where coordinated efforts between fact-checkers and independent media successfully debunked viral misinformation before it could significantly impact public opinion.

Fact-checking organizations like Snopes and FactCheck.org, along with government agencies, provided real-time verification, showing that an informed public can push back against disinformation effectively.

We can’t stop deepfakes from being created, but we can stop them from shaping our reality.

The War for Truth Is Just Beginning

With major elections in the United States, India, and the European Union taking place in 2024, Slovakia’s case is a warning. AI-powered disinformation isn’t a hypothetical threat; it is already here.

Democracy doesn’t die because of technology. It dies when people stop questioning what they see and hear.

Don’t let someone else decide what you believe. Think critically. Stay vigilant. Because the war for truth is only beginning.

Sources & Further Reading:

- De Nadal, L., & Jančárik, P. (2024). Beyond the Deepfake Hype: AI, Democracy, and "The Slovak Case". Harvard Kennedy School Misinformation Review.

- Bloomberg: "Deepfakes in Slovakia preview how AI will change the face of elections."

- The Times: "Was Slovakia’s election the first swung by deepfakes?"

- Reuters Institute: Digital News Report 2024.