- Written by: ANITNELAV

Russian Election Interference in Democratic States: Disinformation Campaigns (Part 1)

Since the 2016 US elections in particular, Russian meddling in foreign elections has become a widely debated topic around the world, because it poses serious risks to the stability of democracies. The objective of this interference, conducted by groups associated with the Russian government, is to manipulate election outcomes and public opinion in various countries. So, let's look at how Russia carries out disinformation campaigns aimed at interfering in elections around the world.

Russian interference strategies typically blend (1) disinformation campaigns, (2) cyberattacks, and (3) other subversive tactics, such as financial support for entities, candidates, or political parties aligned with Moscow's interests. These efforts are not isolated incidents but part of a broader strategy to undermine political cohesion and trust in democratic processes, thereby advancing Russia's geopolitical interests. To examine each area in detail, this research is divided into three parts; today, we focus on disinformation campaigns.

According to AP News (2024), US authorities have warned that Russia could try to meddle in the elections scheduled across dozens of countries in 2024. Over 50 nations, representing half of the global population, are set to hold national elections during this period. Russia is particularly interested in the outcomes of several of these elections, including those for the European Parliament, because of their strategic significance.

As this year's major elections approach, most notably in the US, we need to understand the extent and scope of Russian interference efforts.

Disinformation Campaigns as a Core Tactic

Disinformation campaigns are a cornerstone of Russian meddling efforts: they involve creating and distributing false information to mislead voters, stir political unrest, and polarize societies. Social media platforms are among their most dangerous tools, because fake news spreads rapidly through online communities.

According to a US intelligence assessment shared with around 100 countries (The Guardian, 2023), Russia is using its espionage network, state-controlled media, and social media platforms to undermine public faith in election systems worldwide. The assessment states that "Russia is focused on carrying out operations to degrade public confidence in election integrity," highlighting the Russian government's deliberate attempt to sway public opinion in a damaging way. We have previously discussed how Russia weaponizes social media.

According to the intelligence findings, Russia has a record of using both overt and covert strategies to undermine the credibility of elections. In 2020, during an election in an undisclosed European country, members of the Federal Security Service (FSB) were involved in clandestine activities that included threatening campaign workers. During elections in 2020 and 2021, Russian state media extensively spread bogus accusations of vote fraud in several countries across Asia, Europe, the Middle East, and South America. The assessment also highlights a 2022 case in South America in which Russia used proxy websites and social media to cast doubt on the legality of elections. The US intelligence community has identified two overarching goals of these Russian operations: first, to undermine democratic societies by sowing discord, and second, to discredit democratic elections and the governments that emerge from them.

According to Kim (2020), Russian agents operating through the Internet Research Agency (IRA) have continued to impersonate American individuals, organizations, and candidates in various political races. Their strategy has been to sow discord by stoking anger, fear, and animosity on all sides of the political spectrum. They have concentrated much of their effort on swing states, targeting specific voter groups in an attempt to discourage them from casting ballots.

The IRA's operations have nonetheless changed over time. The IRA has become better at impersonating political institutions, for example by copying official campaign logos and branding. Rather than inventing fake advocacy groups, it now frequently steals the names and logos of legitimate American groups. To further obscure its true goal of building influence networks, the IRA has expanded its techniques to include business accounts and nonpolitical content. We have also written more about Russian trolls. When it comes to contentious issues that divide people along ideological lines, however, the IRA largely sticks to the same strategies it used in 2016. As a voter suppression tactic, it launches attacks within political parties in an effort to split the support base of certain candidates, posing as legitimate political, grassroots, or community organizations, or even using the candidates' own names.

Building on earlier reports, the RAND Corporation has described in more detail how Russia's disinformation campaigns are not just about spreading lies but are strongly rooted in reflexive control theory, a Cold War-era Soviet strategy of feeding an adversary selected information so that it makes decisions favorable to the initiator. This method seeks to influence and control public opinion and decision-making in the United States and elsewhere. According to RAND (2020), Russian strategies include creating divisive narratives on social media, using fake online personas, and targeting contentious issues that elicit strong emotions from different social groups in order to sow conflict. This deliberate manipulation aims to undermine faith in democratic institutions and processes, weakening social cohesion and strengthening Russia's geopolitical position.

In the run-up to the February 2022 invasion of Ukraine, Russia deliberately made disinformation a key component of its propaganda. False narratives were central to these efforts, including assertions that Ukraine was a Nazi dictatorship and that Russia was on a "denazification" mission. Other significant falsehoods spread by Russian media included allegations that Ukraine was committing genocide against Russian speakers in the Donbas region and working with US labs to develop biological weapons. These accusations were later repeated and amplified by far-right outlets and movements, including Infowars and the QAnon conspiracy movement, and even reached mainstream audiences via Fox News' Tucker Carlson, creating a feedback loop that reinforced official Russian propaganda.

Following the invasion, Russia exploited the notion of "fact-checking" to disseminate disinformation. Through outlets such as "War on Fakes," which masquerades as a fact-checking website, Russia has spread disinformation under the pretext of exposing non-existent Ukrainian disinformation, fostering an atmosphere of uncertainty and suspicion toward official information.

Targeted Themes in Russian Disinformation

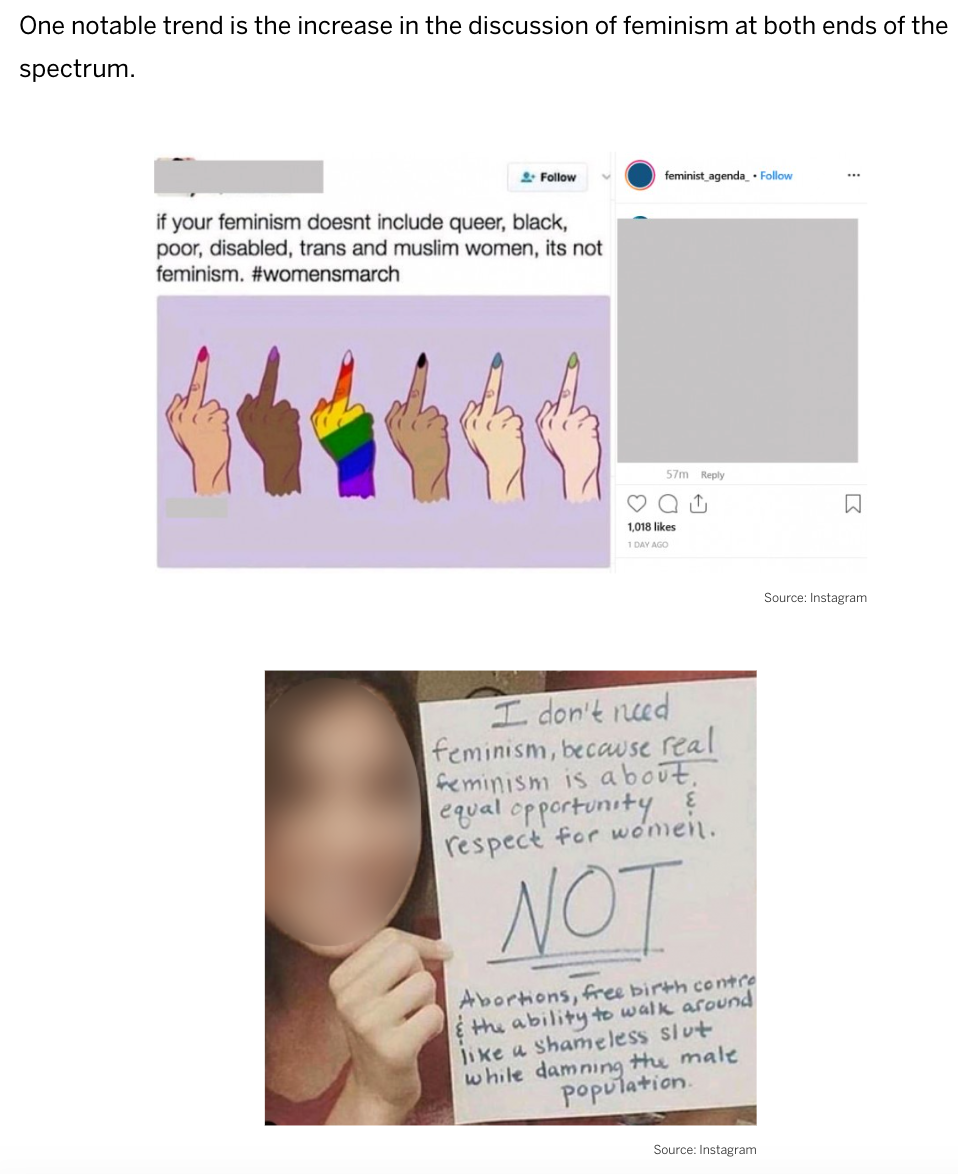

Kim (2020) found that the most common topics in Russia’s disinformation campaigns include race, American nationalism and patriotism, immigration, gun control, and LGBT issues. In 2019, the IRA's posts frequently addressed themes such as racial identity and conflict, anti-immigration sentiment (particularly against Muslims), nationalism and patriotism, sectarian issues, and gun rights. One significant new development is the increased discussion of feminism at both ends of the political spectrum.

Russian disinformation campaigns are strategically designed to interfere in elections and destabilize democratic nations. Taylor (2019), Jenkins (2019), and Pomerantsev (2015) argue that these campaigns serve multiple strategic aims, primarily to preserve Russia's regime by diminishing the influence of the United States and its allies. This strategy involves making Western-style democracy appear less appealing by degrading the global stature of the U.S., creating internal instability, and weakening its alliances with Western countries.

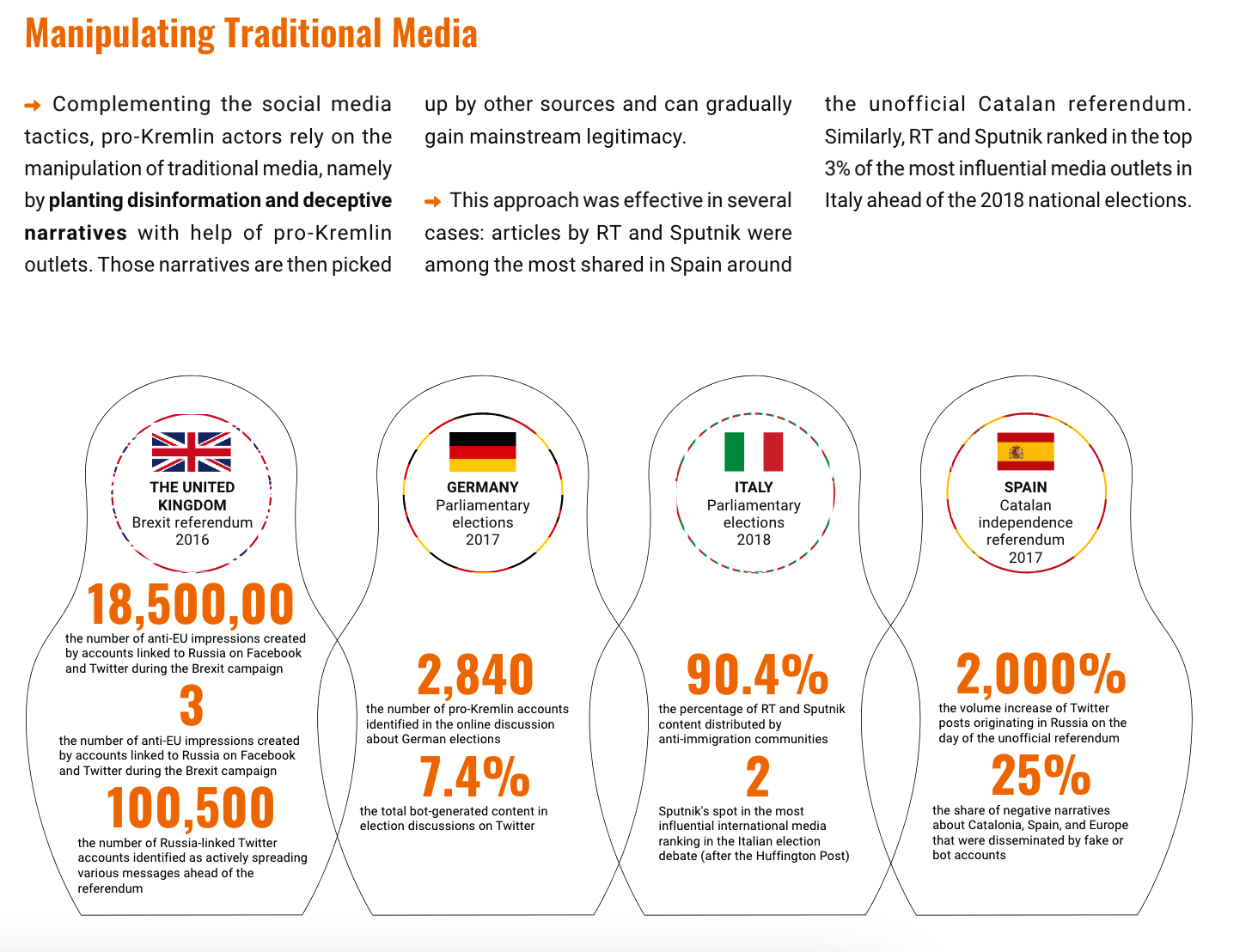

The EUvsDisinfo (2019) analysis outlines three primary classes of disinformation methods used by the Kremlin to manipulate public opinion and interfere in democratic processes worldwide. These methods (disinformation, political advertising, and sentiment amplification) are part of Russia's strategy to undermine democratic institutions, sow societal division, and distort public perceptions of reality.

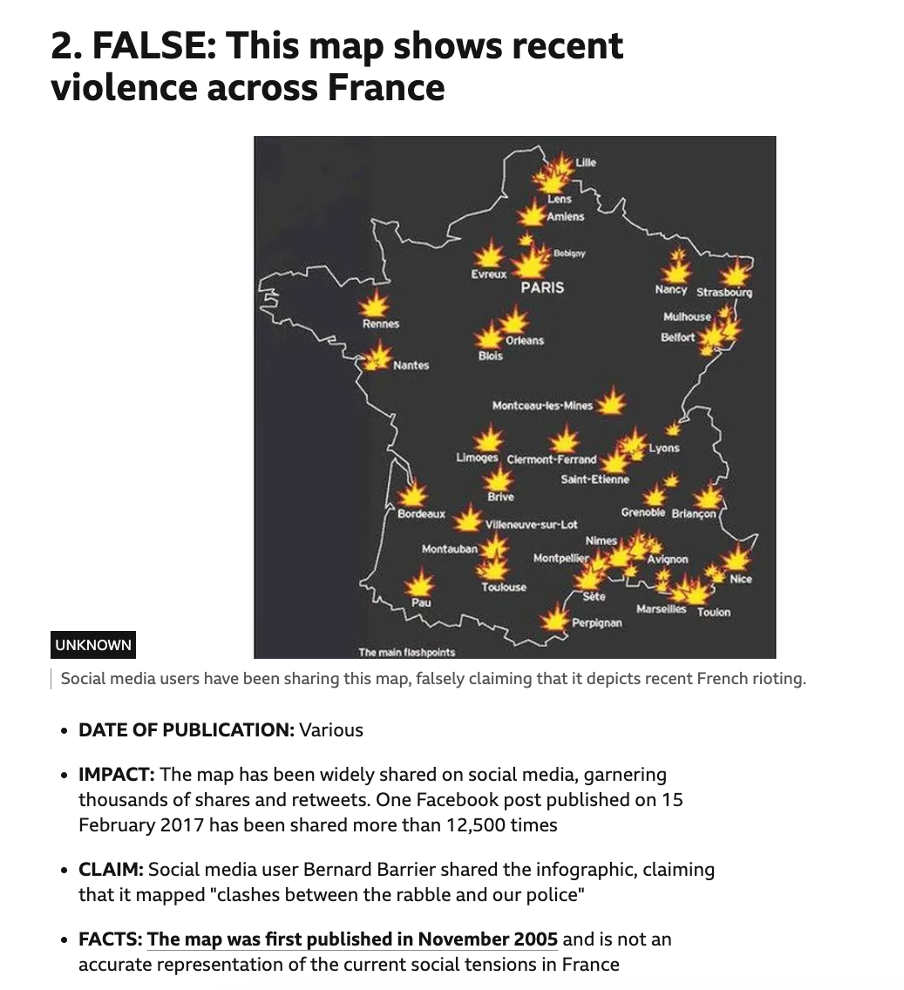

Disinformation

Defined as the fabrication or distortion of news content, disinformation serves to deceive audiences and pollute the information space. By creating misleading narratives about crucial events or issues, it manipulates public opinion. Its objective is not only to influence specific electoral outcomes but also to disrupt the democratic process more broadly by fueling social fragmentation and polarization. Notable instances include interference in elections and referenda across various countries, such as the 2016 U.S. elections, the Brexit referendum, and the 2017 French elections. This persistent method has become a pervasive feature of our information ecosystem, affecting the information landscape continuously rather than only during election cycles.

Political Advertising

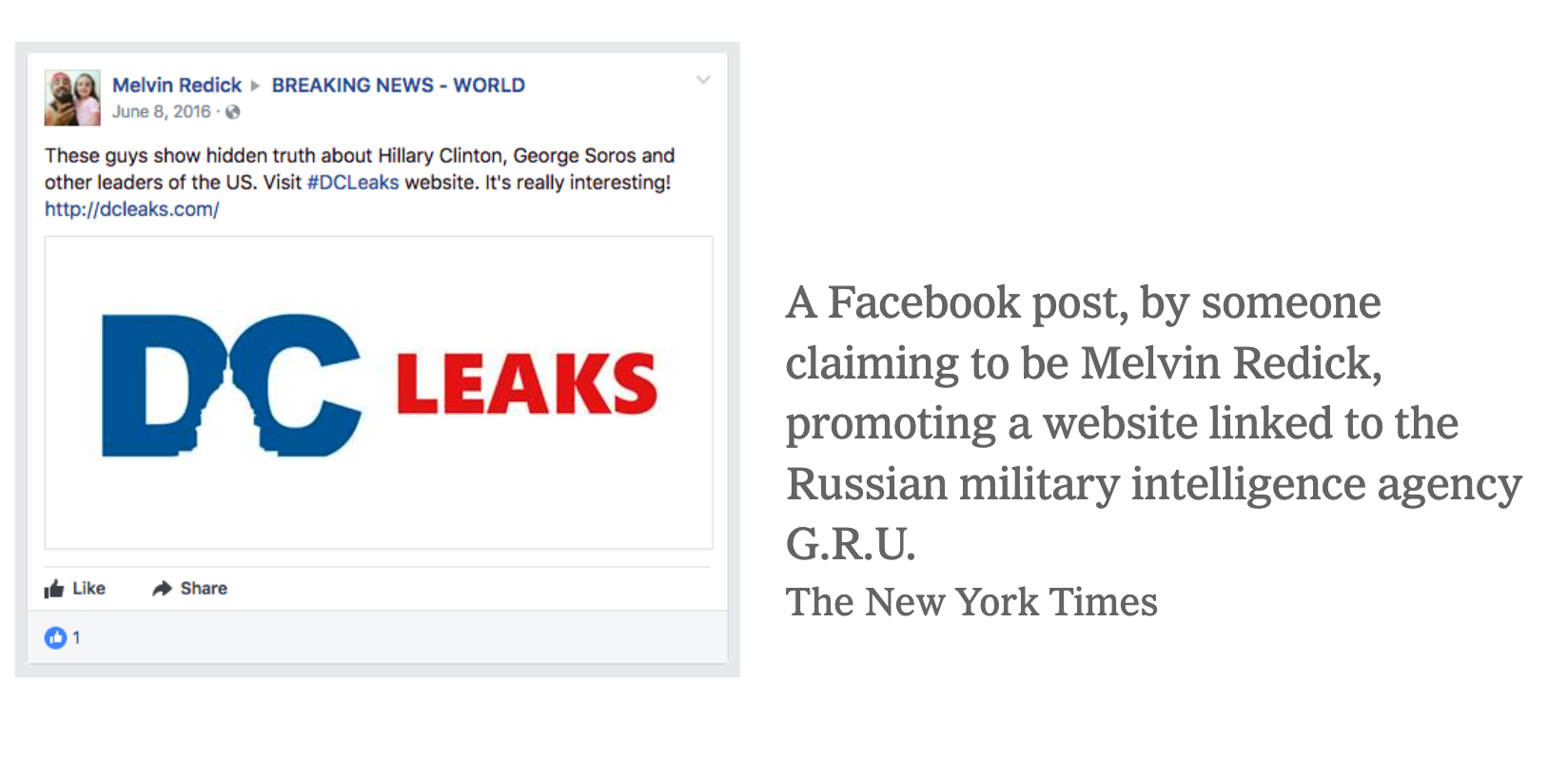

This method involves using fake identities or false-front accounts to purchase online political ads, primarily on social media, to spread disinformation about political entities or issues. The objective is to artificially boost or damage the popularity of political parties, candidates, or public figures, thereby influencing election outcomes. Cases such as the 2016 Brexit referendum and the 2016 U.S. elections show how political advertising has been used to sway public opinion and electoral results under the cover of anonymity, complicating efforts to trace and counter these campaigns.

Sentiment Amplification

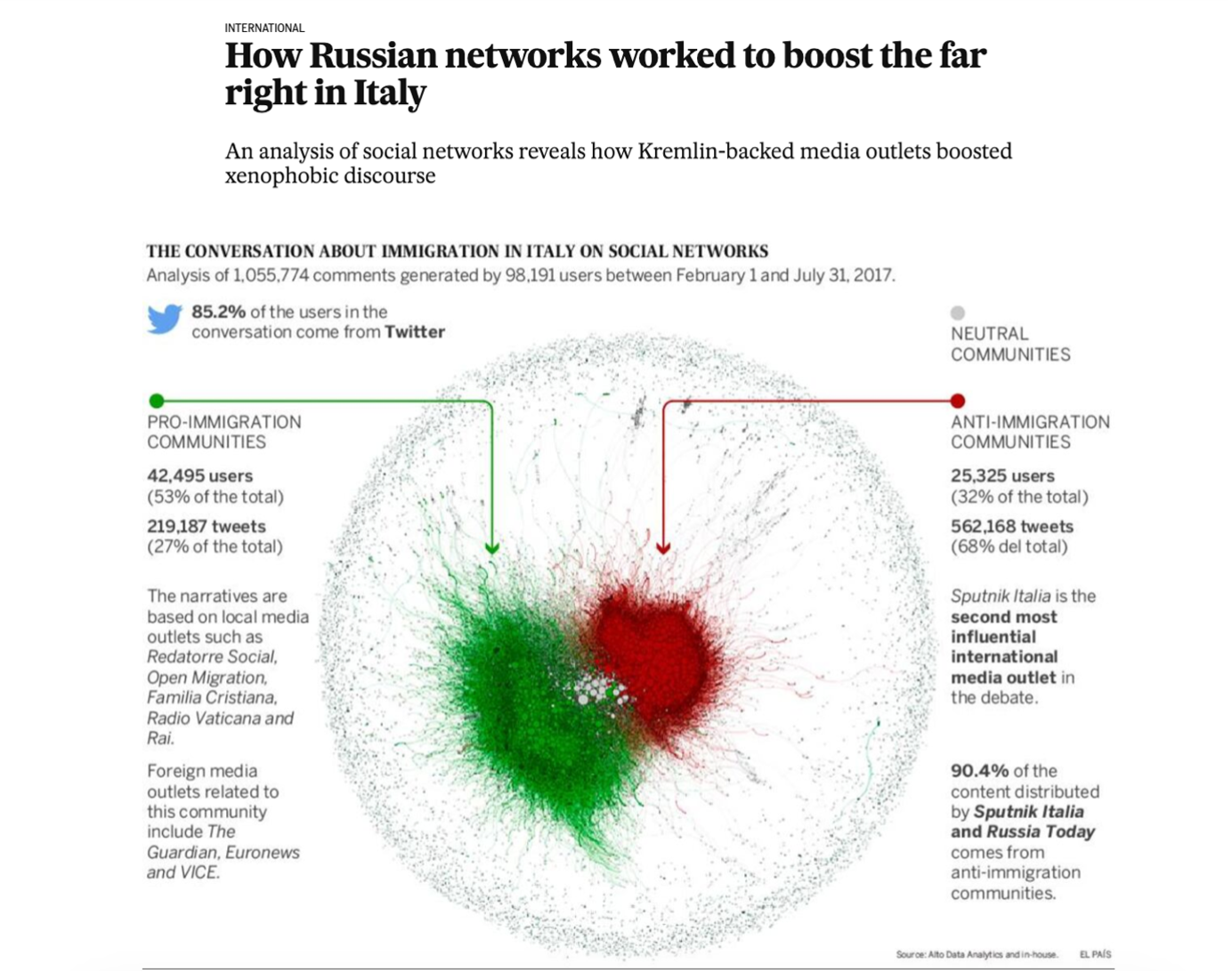

The amplification of sentiment involves the use of fake accounts, trolls, and automated bots to spread disinformation and enhance the visibility of specific narratives. This method is employed both overtly and covertly, with sources either being easily identifiable or obscured to avoid attribution. The primary aim is to increase the proliferation of disinformation, thereby fueling social fragmentation and polarization. It further aims to sow confusion about fact-based realities and erode trust in the integrity of democratic politics and institutions. This technique was notably employed during the 2017 Catalan independence referendum and the 2018 Italian elections, among others.

European Commission Probes Meta for Mishandling Russian Disinformation

According to a recent POLITICO report, the European Commission has initiated investigations into Meta's Facebook and Instagram over their handling of Russian disinformation, particularly in the context of the upcoming European Parliament elections scheduled for June 6-9, 2024. These platforms are suspected of failing to adequately limit the spread of false advertisements, disinformation campaigns, and coordinated bot farms.

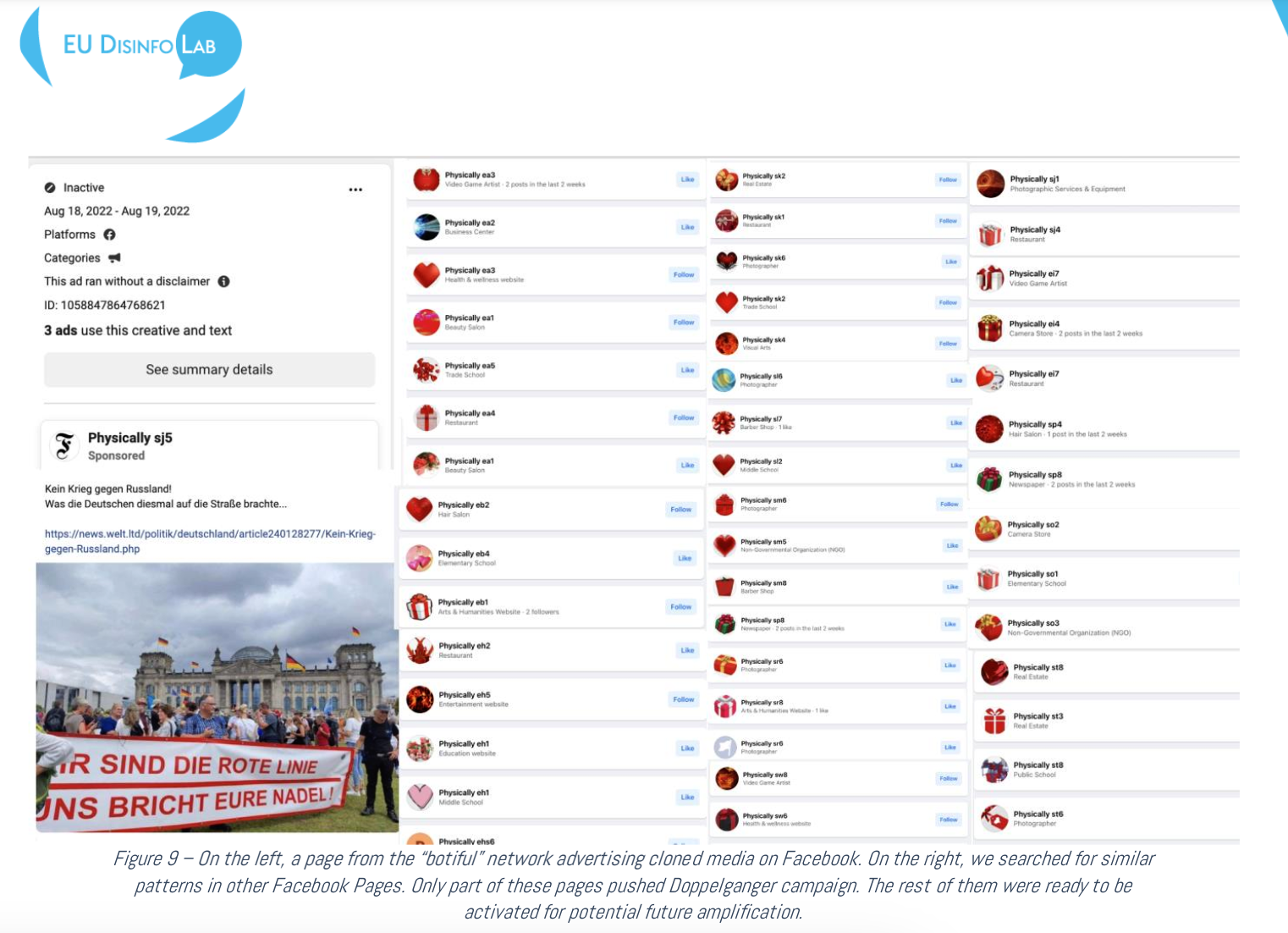

The ministers for European affairs from France, Germany, and Poland raised alarms about ongoing efforts to distort information and deceive voters. Recently, national authorities across Europe have noted an increase in coordinated activities that disseminate narratives antagonistic towards the EU and Ukraine, spreading these through fake news outlets on social media platforms like Facebook and X. A notable Russian campaign, known as Doppelganger, has been particularly active on Facebook. This campaign uses covert advertisements purchased through fake accounts to push narratives favorable to the Kremlin (Politico, 2024).

French European affairs minister Jean-Noël Barrot expressed concern: "We are overwhelmed by Russian propaganda, on a scale which would have been hard to imagine just a few years ago." The European Commission shares this concern, warning that Meta’s lax oversight of advertising on its platforms makes them vulnerable to such foreign manipulation. Speaking on condition of anonymity, two European Commission officials highlighted how susceptible the ad network system is to exploitation by international actors. Findings from the NGO AI Forensics, shared with POLITICO, further support this: thousands of pro-Russian advertisements are slipping through unchecked, a clear sign of Meta's broader struggle to manage advertisers who aim to rapidly influence millions of users globally.

Doppelganger Campaign

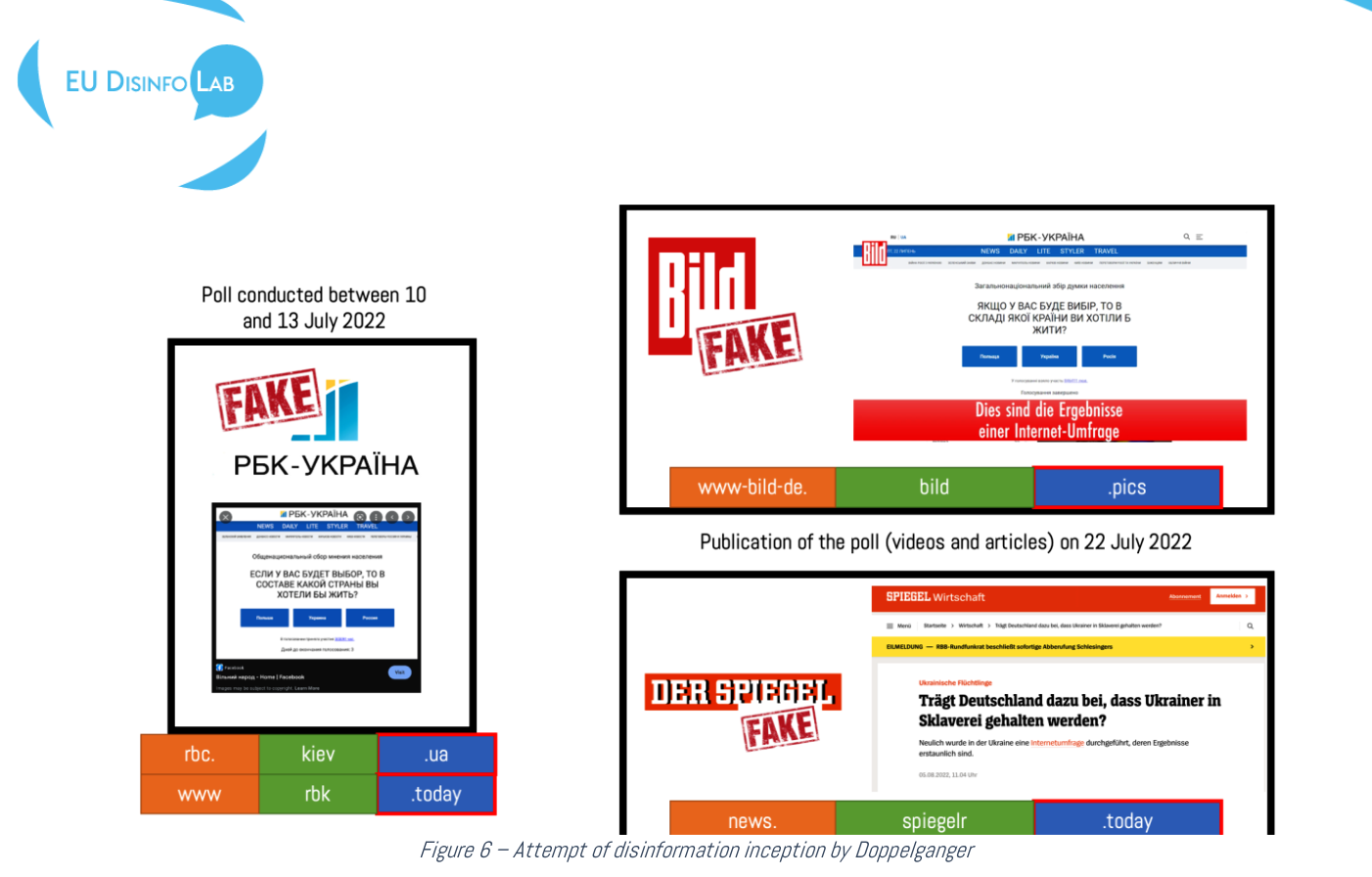

The EU DisinfoLab report details the sophisticated Russian disinformation campaign "Doppelganger," which has been active since at least May 2022. This campaign operates by creating "clones" of legitimate media outlets to spread false narratives and manipulate public perception, particularly concerning the Ukraine conflict and European sanctions against Russia. Doppelganger involves setting up fake websites that mimic the design and domain names of established media outlets, such as Bild, The Guardian, and RBC Ukraine. These sites distribute fabricated articles, videos, and polls designed to mislead the European public. The content typically portrays Ukraine as corrupt, fascist, and failing, while simultaneously promoting Kremlin narratives, such as denying atrocities like the Bucha massacre and spreading fear about the economic impacts of sanctions on European citizens.

The operation employs various sophisticated tactics to enhance its effectiveness and avoid detection:

- Domain Spoofing: The perpetrators purchase internet domain names that are similar to those of genuine media outlets, creating confusion among users.

- Content Fabrication: The sites push out false content that includes professionally made videos and articles, which falsely claim to represent legitimate news sources.

- Social Media Amplification: The campaign uses social media platforms such as Facebook and Twitter to spread its content more widely, relying on networks of fake accounts and pages that promote the disinformation and are then often abandoned, much like burner accounts.

- Geo-Blocking and Smart Redirections: Advanced techniques like geo-blocking restrict access to content based on the user's location, which helps target specific audiences and avoid broader scrutiny.

- Software Exploitation: The campaign uses European-based software, such as Keitaro, to manage its operations, exploiting the digital infrastructure to maintain anonymity and operational security.

The world has grown increasingly aware of the Kremlin's strategies of electoral disruption since Russian meddling in the US presidential election first came to light. A new low point in foreign election meddling came in July 2016, when thousands of Democratic National Committee emails stolen by Russian hackers were released. Over the years, Russia's strategy for meddling in foreign elections has evolved to exploit democratic nations' weaknesses and adapt to shifting geopolitical winds. The Russian government has shifted its focus from regaining regional influence over its neighbors to targeting large democracies such as the United States and the European Union, where the political stakes and strategic consequences are far greater. According to a 2020 CSIS analysis, Russia's menacing interference in the 2016 U.S. presidential election and the Brexit referendum in the United Kingdom relied on tactics such as cyberattacks, hack-and-leak operations, and massive online disinformation campaigns. Another example of Russian meddling was the botched coup attempt in Montenegro, which sought to depose the country's pro-NATO government. Russian military operations in Western Europe, Scandinavia, and the Balkans continued despite international condemnation.

The Russian government's cyber organization APT28 is widely suspected of infiltrating Emmanuel Macron's campaign during the 2017 French presidential election. Because the attack came during the crucial campaign blackout period immediately before the vote, the Macron team was unable to respond to the misleading narratives that followed. Bots and hashtags such as #MacronGate spread the hacked information widely, while the Russian state media outlets RT and Sputnik played a key role in circulating derogatory stories about Macron. Russia's effort to sow social unrest in France was further demonstrated by the creation of fake accounts that promoted anti-immigrant attitudes.

This strategy, known as "fire-hosing," was also used against Angela Merkel's government in Germany, where immigration was a major point of contention. Russian media outlets such as Sputnik, NewsFront Deutsch, and RT Deutsch pushed narratives aligned with right-wing ideology, which swayed public opinion and deepened political divisions.

Sustained Russian-sponsored disinformation campaigns also affected the 2017 Dutch and 2018 Italian general elections, as well as the 2017 Catalan independence referendum in Spain. During the 2018 Swedish general election, disruptive cyberattacks targeted Swedish newspapers and election-related websites, and Russia is also said to have spread misleading reports in Sweden through fake news websites that imitated real ones. Similarly, Russia's interference in elections in Ukraine, Bulgaria, and Macedonia was characterized by the widespread dissemination of false information intended to undermine the credibility of the vote and the political process.

Russia's continual use of these cyberattack and disinformation tactics poses a persistent danger to the stability of democratic institutions and processes around the world. These acts threaten the unity and stability of democracies and serve two objectives: influencing election results and dividing citizens.

Russia’s Continuous Efforts to Undermine Democracy

The EUvsDisinfo (2019) report concludes that over the preceding six years, Russia's interference in the US political landscape had not only been persistent but had also shown a worrying evolution in tactics and reach. What began as a small operation in 2013 had, by 2016, grown into a substantial organizational structure dedicated to manipulating US voters, with over 80 employees and a monthly budget of 1.25 million USD. These efforts peaked in the months leading up to the 2016 presidential election, when the Internet Research Agency (IRA) orchestrated extensive campaigns on platforms such as Twitter, YouTube, Instagram, and Facebook, reaching tens of millions of users in the US.

The strategy was clear: deepen existing societal divisions in the US by exploiting sensitive issues such as race relations, LGBT rights, gun control, and immigration. Tactics included encouraging African American voters to boycott the elections or follow incorrect voting procedures, provoking right-wing voters into confrontation, and spreading a broad spectrum of disinformation designed to polarize voters.

In parallel with these social media operations, Russian military intelligence services carried out hack-and-leak operations against the Democratic National Committee, releasing stolen emails and documents to damage Hillary Clinton's candidacy and bolster Donald Trump's campaign. They also set up fake local news accounts on Twitter aimed at building trust for future disinformation efforts. After 2016, the IRA did not scale back; instead, it broadened the scope and intensity of its efforts, targeting not only older voters but also younger demographics and specific ethnic groups, with the aim of sowing widespread distrust in US institutions.

In Europe, the scope was equally broad, affecting political climates from the Netherlands to Germany. The IRA not only fueled anti-EU sentiments ahead of significant votes like the Brexit referendum and the Dutch EU-Ukraine association agreement referendum but also meddled in the German federal elections by supporting far-right narratives.

Social media companies and governments have both responded to these campaigns. Twitter and Facebook have taken down IRA-linked accounts and content, removing millions of posts, and there have been concerted efforts to increase transparency and reduce fake accounts ahead of elections. Despite these measures, however, the threat of Russian disinformation remains potent. Its techniques and strategies continue to evolve, with newer methods, such as hacking computing devices to create legitimate-looking social media accounts, emerging as a new frontier for these campaigns.

Combating Russian Disinformation

To effectively counter Russian disinformation campaigns, we need to secure our online spaces against hackers and scams, make social media platforms more transparent and accountable, and work with our allies to fight disinformation globally.

Fact-checking organizations do important work verifying the accuracy of news stories and debunking false information, helping ensure that the public receives reliable and trustworthy news. Diverse media ecosystems are also beneficial because they reduce the risk of information monopolies: when there are multiple sources of news and information, it becomes harder for disinformation to spread unchecked. In addition, civil society initiatives and public diplomacy efforts can raise awareness of disinformation and encourage media literacy, empowering individuals to analyze information sources critically.

Media literacy education helps the public learn to distinguish disinformation and propaganda from reliable sources. By teaching people to think critically and verify claims, we can build a more resilient society that is less easily swayed by misleading information.

As we move forward, we must remain vigilant. The history of Russian interference highlights a clear intent to destabilize democratic processes and sow discord among democratic states’ citizens. To protect our elections from foreign interference, we must respond to this persistent challenge with unity and resilience.

Stay tuned for Part Two of this series, where we'll explore the specific cyberattack strategies used by Russia—another important aspect of their attempts to influence global politics by interfering in democratic states’ elections.

References for my research:

https://www.theguardian.com/world/2023/oct/20/russia-spy-network-elections-democracy-us-intelligence

https://www.brennancenter.org/our-work/analysis-opinion/new-evidence-shows-how-russias-election-interference-has-gotten-more

https://www.fivecast.com/blog/disinformation-campaigns-identifying-russian-disinformation/

https://www.politico.eu/article/facebook-and-instagram-hit-with-eu-probes-over-russian-and-other-foreign-countries-disinformation/

https://www.disinfo.eu/wp-content/uploads/2022/09/Doppelganger-1.pdf

https://apnews.com/article/russia-election-trump-immigration-disinformation-tiktok-youtube-ce518c6cd101048f896025179ef19997

https://euvsdisinfo.eu/uploads/2019/10/PdfPackage_EUvsDISINFO_2019_EN_V2.pdf